by Cindy Wu

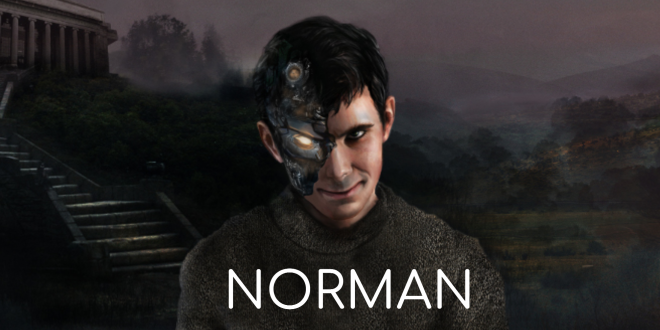

AI is a very promising field: it can automate work across many industries and accelerate industrial development. Even so, many prominent figures, including Elon Musk, still have reservations about what AI is capable of, and with good reason. Norman, an algorithm created at the Massachusetts Institute of Technology (MIT), is a striking example.

In June 2018, the BBC reported that a team at MIT had created Norman as part of an experiment to see what training AI on data from the “dark corners of the net” would do to its worldview. Machine learning algorithms are a very important part of artificial intelligence. People often say that AI algorithms can be biased and unfair, but the culprit is often not the algorithm itself but the data that was fed to it. The same algorithm can see very different things in an image if it is trained on a different data set.

Norman was inspired by this fact: the data used to teach a machine learning algorithm can significantly influence its behavior. The team trained Norman to be a “psychopath” by exposing it only to macabre Reddit images of gruesome deaths and violence, including images of people dying in horrible circumstances. The researchers then used Rorschach inkblot tests to check Norman’s responses. The results were disturbing.
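The idea that one algorithm can "see" different things depending on its training data can be shown with a toy sketch. This is not Norman's actual model or data: the two-number "image features", the captions, and the helper names `train` and `predict` are all made up for illustration, and the algorithm is a simple nearest-neighbour lookup.

```python
import math

def train(examples):
    """For nearest-neighbour, 'training' just means storing the labelled examples."""
    return list(examples)

def predict(model, features):
    """Return the caption of the stored example closest to the given features."""
    nearest = min(model, key=lambda example: math.dist(example[0], features))
    return nearest[1]

# Hypothetical toy data: each "image" is reduced to two invented features.
# Both data sets contain the same images, but with very different captions.
neutral_data = [((0.2, 0.8), "a bird on a branch"),
                ((0.7, 0.3), "a vase of flowers")]
dark_data = [((0.2, 0.8), "a man falling"),
             ((0.7, 0.3), "an accident")]

standard = train(neutral_data)   # same algorithm ...
norman_like = train(dark_data)   # ... different training data

inkblot = (0.25, 0.75)           # one ambiguous input shown to both models
print(predict(standard, inkblot))
print(predict(norman_like, inkblot))
```

The code for both models is identical. Only the captions they learned from differ, and that alone is enough to change what each one reports for the same inkblot.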

Norman’s response can be found at http://norman-ai.mit.edu

Norman illustrates the dangers of artificial intelligence gone wrong. When biased data is used to train a machine learning algorithm, the behavior of the entire system can change. This raises questions about judgements made by machine learning algorithms that may have been affected by biased data.

Despite all those worries and open questions, we hope AI can still be used efficiently and effectively in daily life in the near future. There is still a long way to go before we are able to safely implement AI.

Tempus Magazine By Students, For Students
